Ever since Douglas Engelbart developed the first mouse prototype in 1963, people have tried to find better ways to interact with computers. Nearly 50 years later, almost all work on a computer is still done with a mouse.

Of course, it would be nice to simply talk to a computer and have it not only type for us but also recognize commands. This is already happening, and has been for many years: companies like Nuance have offered speech recognition for a long time, and even robotic toys like Pleo can recognize voice commands and react according to their internal software.

What is the next step?

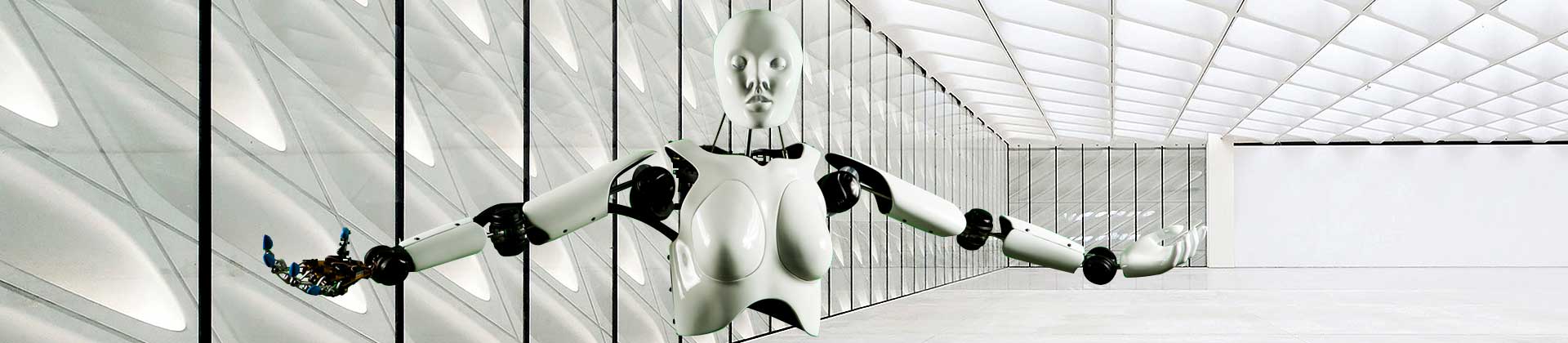

Bioloids and other bipedal robots reflect recent major advances in the number and complexity of robots that can walk and operate in a home environment, not just in the laboratory.

Talking AT a robot is one way, as mentioned above, to control it. But what about talking WITH a robot? What would be necessary for you, as a human, to understand what the robot is trying to tell you?

A lot of work has been done in this area of human-machine interfaces. Now that we have the processing power, computer vision systems, and extremely talented research teams, the next direction is logical: Emotions. Expressions. A face, not just a screen.

Therein lies the challenge. Animatronic faces have been around since Walt Disney and his Imagineering team created the first human audio-animatronic figure, Abraham Lincoln, for the 1964 New York World’s Fair. While it was an amazing leap in entertainment and education, the face was very simplistic: mouth open/close, eyes left/right, and so on.

Actually creating emotions is not simple. There are roughly 52 muscles in a human face, and the subtle motions they produce turn out to be critical to re-create in a robot face. There have been many attempts to do this. The problem is that if you aim for absolute realism and miss even a small, subtle part of that motion, the result becomes terrifying rather than entertaining. It is certainly NOT something you would want to be next to.

The solution is the Mecha ToMoMi head from Custom Entertainment Solutions. The Technion Institute (whose talent and standards exceed even M.I.T.’s) is currently using the ToMoMi head to study how humans interact with a robot face. The head is intentionally sculpted to look “fake” rather than absolutely realistic. The face is pure white, also intentionally, to move it farther away from a “real” human face. The back of the head is open, not covered in hair or some other attempt at realism. With this approach, scientists can easily program in emotions while avoiding the “uncanny valley”. The result is a very emotive head, with CMOS supermicro color video cameras in each eye, that is suitable for a bipedal robot.

No more mouse.

No more talking AT the robot.

The future is talking WITH a robot that is pleasant to look at and interact with.

Stay tuned…

2011